The Gulf of Execution in the Age of AI Agents

If your process is broken, AI is not going to fix it.

I went on a cruise to the Bahamas for the holidays. I planned to use ride shares for the short trips between the airport, the cruise port, and the hotel. However, I had a spare day in Miami after the cruise and decided to visit Everglades National Park.

So, I needed a car for the day. I used a major rental car provider’s website to book one, since my past experiences with the company had been good. I filled out the online reservation and submitted it. It returned an error and asked me to try again in a few minutes. I did, and it errored again. On the third try, I changed the pickup time and location. It worked.

Done, right? Wrong. When I checked my email, I found three rental confirmations and a triple charge on my credit card. I thought to myself: oh no, not the red phone fiasco again.

I tried to cancel online, and it promptly applied a cancellation fee. Then I opened a ticket with the company, specifying which reservations to cancel. Someone responded that I had to call them to cancel. I called the next day and, a few robot prompts later, reached a human. After I explained the situation, they verified the reservations and cancelled the other two.

Why did I end up with three reservations? When I tried the first two times, it looked like the system had crashed. An “Unknown error” popped up, asking me to retry. But behind the scenes, the story was different. The system had actually completed the reservation. The only thing that failed was the response to the confirmation screen: the backend did not return “Success” fast enough, so the request timed out and the front end assumed something had gone wrong in the backend.
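To make this failure mode concrete, here is a minimal Python simulation. It is a sketch under stated assumptions, not the rental company’s actual system: `RentalBackend`, `front_end_submit`, the response latencies, and the 30-second timeout are all hypothetical. The one assumption taken from the story is that the backend commits the reservation before its “Success” response reaches the front end.

```python
from dataclasses import dataclass, field


@dataclass
class RentalBackend:
    """Hypothetical backend that commits a booking before it responds."""
    latencies_s: list[float]  # how long each "Success" response takes to arrive
    bookings: list[tuple[str, str]] = field(default_factory=list)

    def reserve(self, customer: str, pickup: str) -> float:
        # The reservation is committed first...
        self.bookings.append((customer, pickup))
        # ...and only then is the response sent; return its latency.
        return self.latencies_s.pop(0)


def front_end_submit(backend: RentalBackend, customer: str, pickup: str,
                     timeout_s: float = 30.0) -> str:
    """Hypothetical front end that treats a slow response as a failure."""
    latency = backend.reserve(customer, pickup)
    if latency > timeout_s:
        # The booking exists, but this is all the user ever sees.
        return "Unknown error, please retry"
    return "Confirmed"


# Two slow responses, then a fast one (all latencies are made up).
backend = RentalBackend(latencies_s=[45.0, 40.0, 5.0])
messages = [front_end_submit(backend, "traveler", loc)
            for loc in ["Airport", "Airport", "Downtown"]]
print(messages)
print(len(backend.bookings))  # 3 bookings, although the user saw one success
```

From the backend’s point of view, every retry is a new, independent transaction, which is why the retries multiplied both the reservations and the charges.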

Can this be fixed in the Agentic Universe?

I have developed a nasty habit of projecting every current system problem into the agentic world. Given that the tech industry is obsessed with “agents,” would they solve this for the end user? Sure, enterprises are building agents to capture new markets, increase productivity, and reduce costs, but what value actually transfers to end users?

First, let’s see what the experience should look like. The booking experience could have been much better. Here is what Brian Chesky’s experience rating levels (it used to be 7 stars, and now it is 11 stars) would look like for this scenario:

3 Star Experience: You visit the website, select dates, filter for “SUV”, and manually enter your name, address, and credit card number. You hit submit. It books the car. This is the standard Web 2.0 flow.

5 Star Experience: You open the rental app. You give minimal information and pay with Face ID. Confirmed in 10 seconds. The backend is still shared, so the issues of the 3-star experience remain.

7 Star Experience (The Agentic Experience): You just tell your AI, “I’m going to Miami.” It knows you need a car. It asks you for a few details and preferences, and then simply pings you: “I have booked a car for you.” You break free from rigid experiences.

9 Star Experience (The Personalized Agentic Experience): Your personal agent assists you in booking a car. It takes your booking by voice, confirms your preferences, and seeks your approval. It lets you customize the booking, watch for deals, and make changes on the fly.

11 Star Experience (The Pseudo* AGI Experience): You don’t even ask. Your AI notices you booked a flight yesterday, checks your loyalty status, and puts a hold on your preferred car. It knows you disembark at Miami but fly out from Fort Lauderdale, so it customizes the pickup and drop-off locations to be closer to you. When you disembark, you get a notification that the rental car is ready for pickup.

*Pseudo-AGI: I personally think AGI is a long way off. Until then, the best bet is to mimic it.

The reality

We are still stuck in a 3-star world (broken processes, complex interfaces, too many human interventions) even though we believe we have the technology for 7-, 9-, and 11-star agentic experiences.

Unfortunately, we will be stuck for a while. Companies have spent decades building their current processes, apps, and operations. The path forward for them is not starting fresh; it’s working around their legacy debt. That’s why most AI agents today feel more like fancy chatbots than actual enablers.

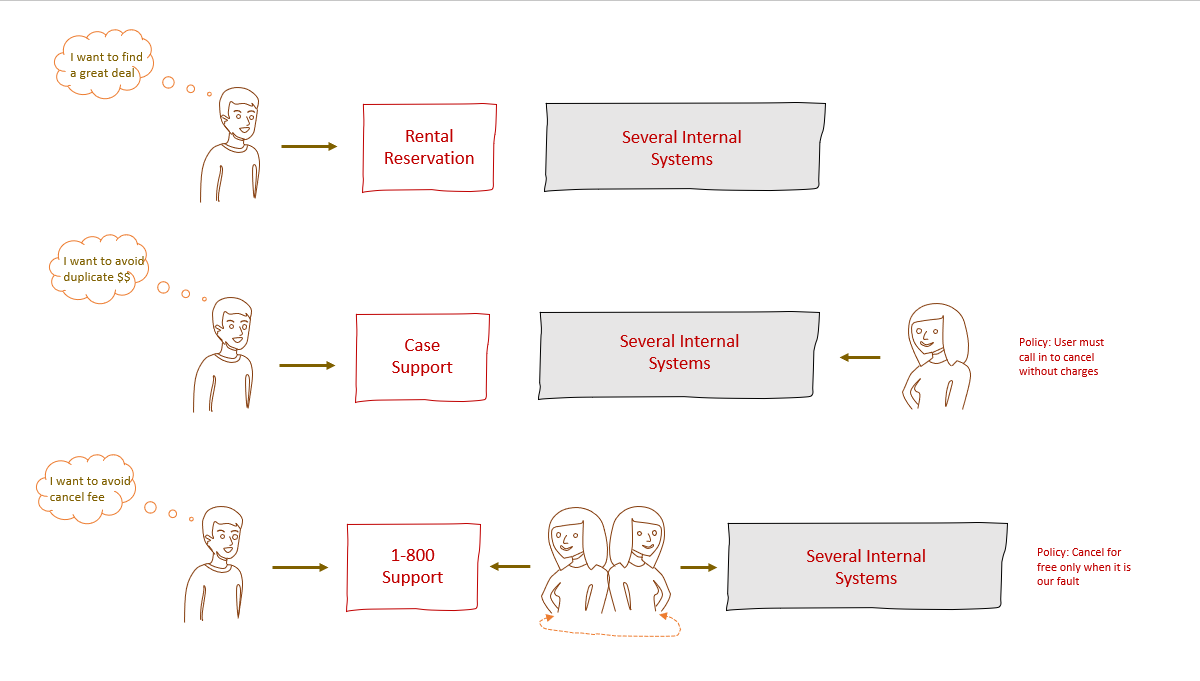

In this whole episode, I dealt with three different experiences: the reservation UI, case support, and finally phone support. I spent around 3 hours, including context switches.

Current World Process Map

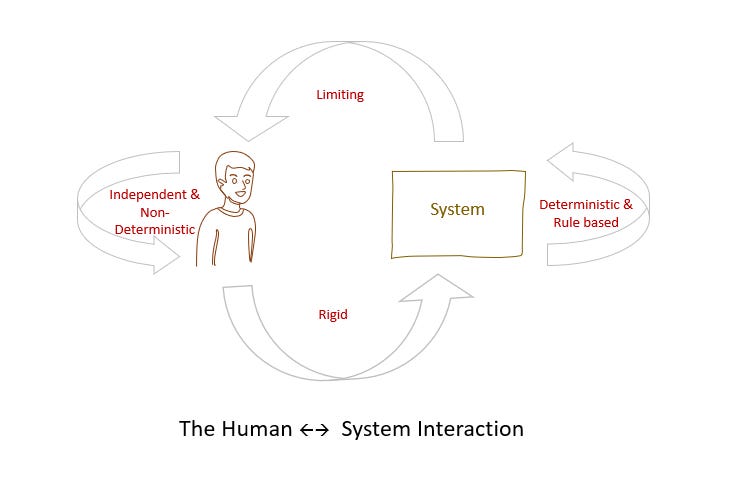

The existing processes are designed for human ←→ system transactions. When systems are involved, the transactions are not agentic; they are 100% programmed and deterministic between systems and humans. So when you add an agent to the mix, it struggles to work around the old-world inefficiencies and gaps.

This diagram captures the core challenge of Human-Computer Interaction: a mismatch between how humans naturally think and how computers actually work. On one side, humans are unpredictable and independent. We solve problems creatively, change our minds, make typos, and jump between tasks based on intuition and context. On the other side, computers follow strict rules, producing the same output for the same input every time, unable to deviate from their programming or interpret what you probably meant.

When these two different behaviors meet, frustration emerges in both directions. Humans experience computers as rigid because the system won’t bend to accommodate natural human behavior like spelling mistakes, vague requests, or doing things out of order. Meanwhile, computers make humans feel limited because to get anything done, we must abandon our natural thinking and conform to the machine’s logic through specific menus, required fields, and fixed workflows.

This is known as the Gulf of Execution: the gap between how we want to accomplish something and how the computer demands we accomplish it. In essence, humans are creative and flexible while computers are orderly and inflexible, and when the two interact, we feel constrained and the technology feels broken.

The system barely has context outside of the transaction. It doesn’t know whether you are booking a car for yourself or your family, or whether your previous booking failed. The second and third attempts also booked cars for me. The system thought I was renting three cars, all at the same time, in two different locations. That is plausible but not probable. Had I called the company, the person on the phone would have booked it in a single transaction and questioned the need for the other two bookings.

Recall that I had to deal with three different experiences: the reservation UI, case support, and finally phone support. The failure of each experience pushed me into the next one with a changed goal. I started out wanting a cheap deal and ended up wanting a refund without penalties.
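The missing context can be illustrated with a small check. The sketch below is hypothetical: `Booking`, `probable_duplicates`, `submit`, the dates, and the rule “same customer, overlapping rental window” are assumptions for illustration, not any provider’s real policy. Like the person on the phone, it raises a doubt and asks, instead of silently booking or silently rejecting.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Booking:
    customer_id: str
    pickup_location: str
    start: datetime
    end: datetime


def overlaps(a: Booking, b: Booking) -> bool:
    # Two rental windows overlap when each one starts before the other ends.
    return a.start < b.end and b.start < a.end


def probable_duplicates(new: Booking, existing: list[Booking]) -> list[Booking]:
    """Existing bookings that make `new` look like an accidental repeat.

    Assumed heuristic: same customer and overlapping rental window,
    regardless of pickup location (plausible is not the same as probable).
    """
    return [b for b in existing
            if b.customer_id == new.customer_id and overlaps(new, b)]


def submit(new: Booking, existing: list[Booking]) -> str:
    dups = probable_duplicates(new, existing)
    if dups:
        # A human agent would ask before booking; so should the system.
        return f"You already have {len(dups)} overlapping booking(s). Book another car?"
    existing.append(new)
    return "Confirmed"


# Hypothetical dates: the first two "failed" attempts had already committed.
first = Booking("cust-1", "Airport", datetime(2025, 1, 3, 9), datetime(2025, 1, 3, 20))
second = Booking("cust-1", "Airport", datetime(2025, 1, 3, 9), datetime(2025, 1, 3, 20))
third = Booking("cust-1", "Downtown", datetime(2025, 1, 3, 10), datetime(2025, 1, 3, 20))
print(submit(third, [first, second]))
```

The check does not forbid renting several cars; it only turns an improbable pattern into a question the customer can answer.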

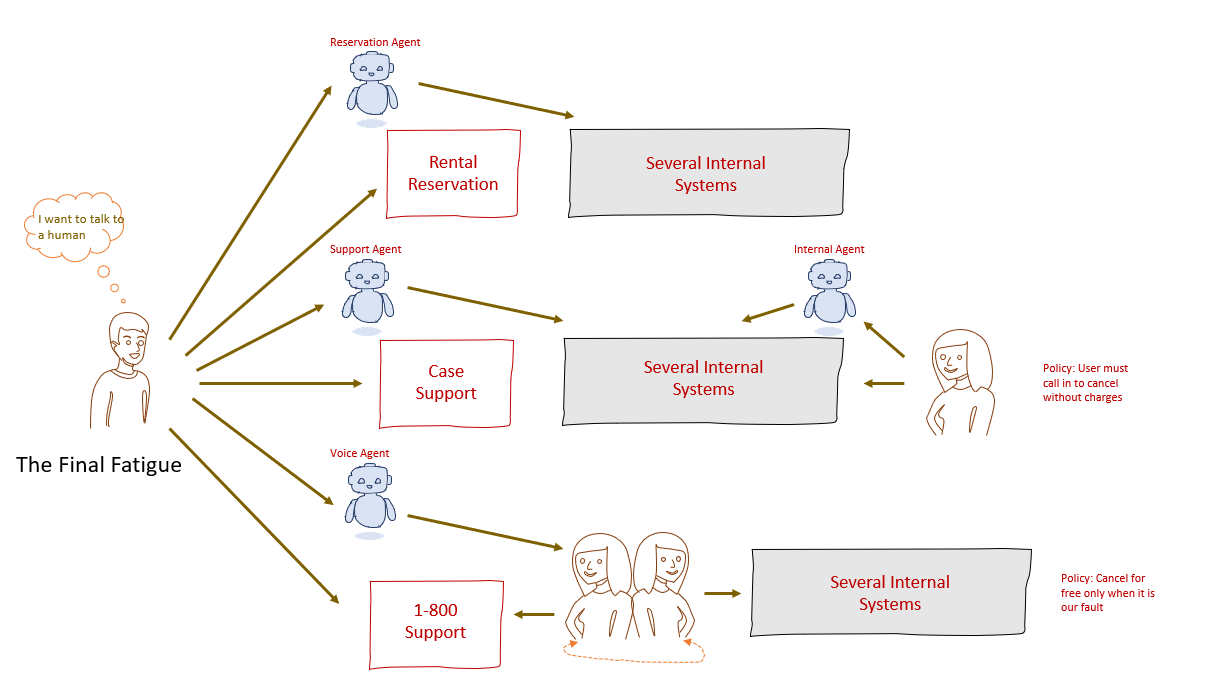

Could an agent (on the company’s side) overcome this? Let’s look into how most enterprises are ‘agentifying’ their processes. Agents are bolted on top of the old world and tools are strangled or embedded within the existing apps.

The agents attempt to bridge these disconnected systems but don’t replace or modernize them, adding layers of complexity rather than simplifying. The friction intensifies through the conflicting policies shown on the right: users must call to cancel without charges (forcing them from digital channels to voice), and cancellations are free only when the company is at fault, a subjective determination that AI struggles with. The result is a classic “Deflection vs. Resolution” problem. The company deploys multiple agents to automate and deflect volume, but because these are superficial additions rather than systemic improvements, bureaucratic policies and complex rules that bots can’t handle push customers back toward human agents anyway. The outcome is a high-effort, frustrating loop that satisfies no one.

Agent Native solution

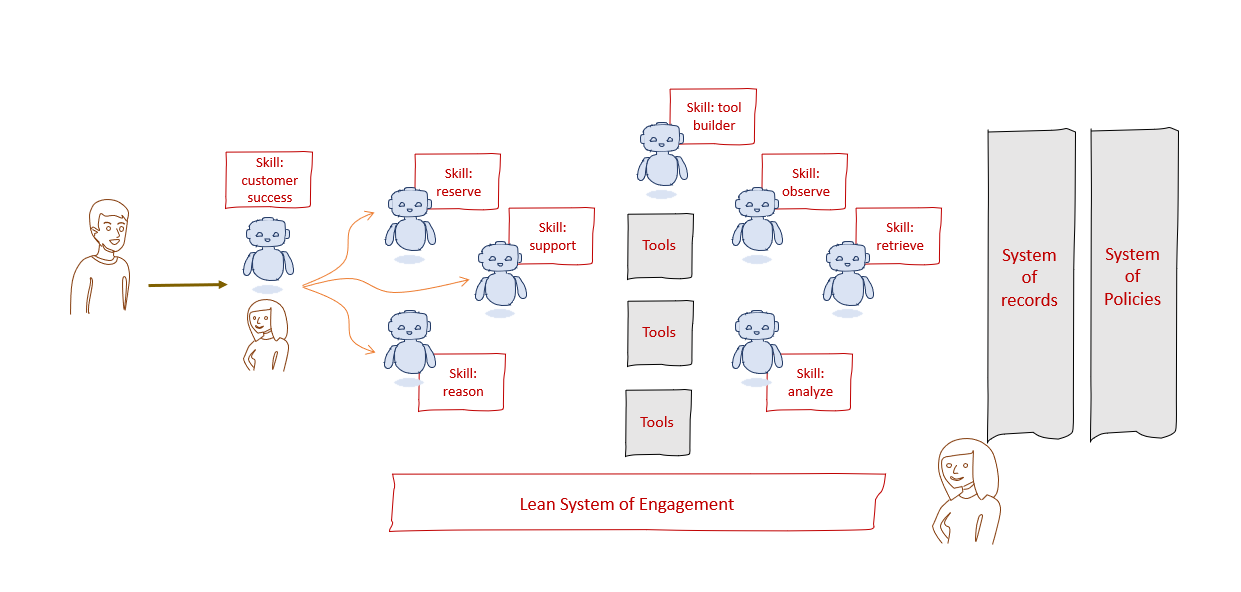

Imagine a rental startup that decided to build an AI Agent native solution from scratch.

In an agent-native architecture, a single Experience Agent would own the entire customer journey, mimicking a walk-in rental office. When you walk into a physical location and the first booking attempt fails, the sales representative doesn’t make you start over with someone else: they own the retry, they know you’re booking just one car, and they prevent duplicate charges because they have the full context.

An AI agent can absolutely replicate this accountability. When a booking fails, the Experience Agent wouldn’t punt to another channel or make the customer repeat themselves. It would own the retry with full context of what just happened. Through intelligent choreography, it could delegate specific tasks behind the scenes: a troubleshooting sub-agent could verify whether the transaction actually went through despite the error message, a payment sub-agent could reverse any duplicate charges, and a booking sub-agent could complete the retry, all invisible to the customer. The Experience Agent maintains the conversation thread, the context, and most importantly, the accountability for resolution.

This isn’t about having fewer agents; it’s about building an agentic enterprise where one agent takes ownership while orchestrating specialized capabilities as needed. The result is a seamless experience where the customer feels heard, understood, and taken care of, just like walking into that rental office where one person helps you from start to finish. There are no alternative rigid UI experiences at all.
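The choreography described above can be sketched in Python. Everything here is hypothetical: the class names, the in-memory `Ledger`, and the assumption that every attempt in one conversation carries a shared `request_id` are illustrative, not a real agent framework’s API. The sub-agents are plain objects rather than LLM calls, to keep the ownership structure visible.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Ledger:
    """Hypothetical shared backend state."""
    bookings: dict[str, str] = field(default_factory=dict)  # request_id -> confirmation
    charges: list[tuple[str, float]] = field(default_factory=list)
    refunds: list[tuple[str, float]] = field(default_factory=list)

    def net_charged(self, request_id: str) -> float:
        paid = sum(amt for rid, amt in self.charges if rid == request_id)
        back = sum(amt for rid, amt in self.refunds if rid == request_id)
        return paid - back


class TroubleshootingAgent:
    def find_committed(self, ledger: Ledger, request_id: str) -> Optional[str]:
        # Did an attempt that "failed" on screen actually go through?
        return ledger.bookings.get(request_id)


class PaymentAgent:
    def reverse_duplicates(self, ledger: Ledger, request_id: str, amount: float) -> int:
        charged = sum(1 for rid, _ in ledger.charges if rid == request_id)
        refunded = sum(1 for rid, _ in ledger.refunds if rid == request_id)
        extra = max(charged - refunded - 1, 0)  # keep exactly one live charge
        ledger.refunds.extend([(request_id, amount)] * extra)
        return extra


class BookingAgent:
    def book(self, ledger: Ledger, request_id: str, amount: float) -> str:
        code = f"CONF-{len(ledger.bookings) + 1}"
        ledger.bookings[request_id] = code
        ledger.charges.append((request_id, amount))
        return code


@dataclass
class ExperienceAgent:
    """Owns the conversation; delegates to sub-agents behind the scenes."""
    ledger: Ledger
    troubleshooter: TroubleshootingAgent = field(default_factory=TroubleshootingAgent)
    payments: PaymentAgent = field(default_factory=PaymentAgent)
    booker: BookingAgent = field(default_factory=BookingAgent)
    thread: list[str] = field(default_factory=list)  # one context kept across retries

    def handle_booking(self, request_id: str, amount: float) -> str:
        # 1. Before retrying, check whether an earlier attempt committed.
        code = self.troubleshooter.find_committed(self.ledger, request_id)
        if code is None:
            # 2. Nothing committed yet: the booking sub-agent does the retry.
            code = self.booker.book(self.ledger, request_id, amount)
        # 3. Reverse any duplicate charges left by earlier attempts.
        reversed_n = self.payments.reverse_duplicates(self.ledger, request_id, amount)
        msg = f"Your car is booked ({code}). Reversed {reversed_n} duplicate charge(s)."
        self.thread.append(msg)
        return msg


# Assumed state after the timeouts: the booking committed, the card was charged twice.
ledger = Ledger(bookings={"trip-1": "CONF-1"},
                charges=[("trip-1", 60.0), ("trip-1", 60.0)])
agent = ExperienceAgent(ledger)
print(agent.handle_booking("trip-1", 60.0))
print(ledger.net_charged("trip-1"))
```

The design point is that only `ExperienceAgent` talks to the customer and keeps the thread; the sub-agents do their work without ever handing the customer off to another channel.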

Closing Thoughts

Despite all the years of digital transformation, most customer experiences are still fragmented by disconnected workflows, fuzzy policies, siloed data, and incompatible tools. When breakdowns occur, the customer often becomes responsible for responding and following up. This leads to repeated form fills, redundant explanations, navigating multiple interfaces, and reliance on someone with proper authority to handle problems the system cannot resolve.

Bolted-on AI agents don’t solve these problems. When integrated into the current system, they face the same challenges: no context, no authority, ambiguous policies, and no smooth handoff. Rather than improving the experience, they simply reach the same deadlocks faster. Chatbots, in particular, are notorious for failing to handle real issues. Worse, automation cuts costs at the expense of increased customer frustration.

The value of agentic systems lies in ownership, not just better interfaces or quicker transactions. An agent-led organization restores what fragmented processes lack: a single entity that takes responsibility, truly knows the customer, brings continuity when something goes wrong, and sees it through to resolution. This approach does not demand replacing systems overnight, but rather shifting accountability from individual processes to the customer’s experience.

If companies neglect to prioritize agent accountability in their design, customer experiences will remain mediocre regardless of technological developments. Achieving excellent service requires fewer excuses, clearer ownership, and systems that respond to people, not simply more agents.

With this shift, I hope booking a car will no longer feel like interacting with software, but rather like receiving authentic help.